Scientific integrity, Open Science and reproducibility

Overview

Teaching: 60 min

Exercises: 60-90 minQuestions

What is Scientific integrity and what is the link to Open Science and reproducibility?

What is Open Science and which aspects are important to me?

What is reproducibility and why should I care about it?

Objectives

Understand the connections between scientific integrity, Open Science and reproducibility

Name the requirements on designing, carrying out and reporting of research projects such that scientific integrity is respected

Discrimate between so-called negative and positive results

List all/many of the dimensions of Open Science

Explain why and know where to preregister studies

Apply these concepts when reading about research

1. What is scientific integrity and what is the link to Open Science and reproducibility?

Scientific/research integrity at the University of Zurich

Often when the term “Scientific integrity” comes up one would think about topics such as

-

Misconduct/ fraud procedures

https://www.research.uzh.ch/de/procedures/integrity.html -

Ethical issues, especially regarding research on humans and on animals

https://www.uzh.ch/cmsssl/en/researchinnovation/ethics.html -

Conflicts of interest

https://www.uzh.ch/prof/apps/interessenbindungen/client/

Note that conflicts of interest can also be the subject of studies: https://doi.org/10.1186/s13643-020-01318-5

For each of these topics we have the University of Zurich links here, most other universities will also have corresponding regulations in place. But these topics are not the main interest of this course, we will instead focus at the aspects of research integrity discussed below.

National and international guidance documents on research integrity

Several guidance documents exist, see three European examples here:

-

Towards a Research Integrity Culture at Universities (LERU)

https://www.leru.org/publications/towards-a-research-integrity-culture-at-universities-from-recommendations-to-implementation -

The European Code of Conduct for Research Integrity

https://allea.org/code-of-conduct/ -

Scientific Integrity at the Swiss Academies of Arts and Sciences

https://akademien-schweiz.ch/en/uber-uns/kommissionen-und-arbeitsgruppen/wissenschaftliche-integritat/

⇒ We will have a brief look at each of the documents and work on the Swiss document in more detail.

LERU: Towards a research integrity culture at Universities

In a summary chapter the guidance document states what Universities should do to empower sound research:

Improve the design and conduct of research:

- statistics, research design, methodology and analysis

- newest standards

- understanding limitations

- checklists to improve design

Improve the soundness of reporting

- reporting guidelines

- pre-registration

- publish all components of experimental design.

- Value negative results and replication studies

⇒ The points in bold are topics of this course and directly related to reproducibility as we will see below and later.

European code of conduct for research integrity

The EU code states that Good research practices are based on fundamental principles of research integrity.

-

Reliability in ensuring the quality of research, reflected in the design, the methodology, the analysis and the use of resources.

-

Honesty in developing, undertaking, reviewing, reporting and communicating research in a transparent, fair, full and unbiased way.

-

Respect for colleagues, research participants, society, ecosystems, cultural heritage and the environment.

-

Accountability for the research from idea to publication, for its management and organisation, for training, supervision and mentoring, and for its wider impacts.

⇒ You will find these same main principles in the Swiss guidance document! Adhering to the principles of reliability, honesty and accountability requires, among other aspects, to work reproducibly and openly.

The Swiss code of conduct for scientific integrity

The same principles occur in the Swiss document, here with a direct pointer to reproducibility:

“Reliability, honesty, respect, and accountability are the basic principles of scientific integrity. They underpin the independence and credibility of science and its disciplines as well as the accountability and reproducibility of research findings and their acceptance by society. As a system operating according to specific rules, science has a responsibility to create the structures and an environment that foster scientific integrity.”

Quiz on the Swiss code of conduct for scientific integrity

For these questions, please read or search the Code until page 26.

Audience

At which of the following groups of people is the code of conduct aimed at?

- researchers at research performing institutions

- educators at higher education institutions

- administrative staff at research performing institutions

- students at higher education institutions

Solution

T researchers at research performing institutions

T educators at higher education institutions

F administrative staff at research performing institutions

T students at higher education institutions

Reliability

For reliability researchers need to use, e.g.,

- appropriate study designs

- the most current methods

- simple analysis methods

- transparent reporting

- traceable materials and data

Solution

T appropriate study designs

F the most current methods

F simple analysis methods

T transparent reporting

T traceable materials and data

Computer code

The code does not mention reproducible code (in the sense of computer code) directly. Find an implicit location where the use of reproducible code is implied by the standards of Chapter 4. Copy the entire bullet point or just the relevant verb.

Solution

The Code states “Researchers should design, undertake, analyse, document, and publish their research with care and with an awareness of their responsibility to society, the environment, and nature.” Using a scripting language for data analysis and providing the corresponding code hence caters to the “documenting” step.

Negative results

The non-publication of so-called negative results can be seen as a violation of scientific integrity. Find the behavior in Chapter 5 of the Code which this can be related to.

Solution

The Code lists “omitting or withholding data and data sources” as a behavior wich is an examples of scientific misconduct.

Example

Publication of negative results

Therapeutic fashion and publication bias: the case of anti-arrhythmic drugs in heart attack

- In the 1970s, it was found that the local anaesthetic drug lignocaine (lidocaine) suppressed arrhythmias after heart attacks

- That this claim was wrong was difficult to recognise from small clinical trials looking only at effects on arrhythmias, not outcomes that really matter, like deaths.

- Large clinical trials in the late 1980s showed that the drugs actually increased mortality.

- The results of Hampton and co-authors’ small but negative trial regarding the anti-arrhythmic agent lorcainide were not published because no journal was willing to do so at the time.

- A cumulative meta-analysis of previous anti-arrhythmic trials would have helped avoid tens of thousands of unnecessarily early deaths, even more so if results like those of Hampton and co-authors would have been available.

- With the words ‘publication bias’ in the title, the trial results could finally be published in the early 1990s:

Therapeutic fashion and publication bias: the case of anti-arrhythmic drugs in heart attackJ Hampton https://journals.sagepub.com/doi/10.1177/0141076815608562

Bottom line: This is a very impressive example of the consequences of non-publication of “negative” results. The authors themselves are not to blame, they have maintained their integrity as researchers. The example shows that the publication of all results is indeed a principle of research integrity in the sense of the integrity of the research record as a whole.

2. What is Open Science?

Let´s play the game “Open up your research”

https://www.openscience.uzh.ch/de/moreopenscience/game.html

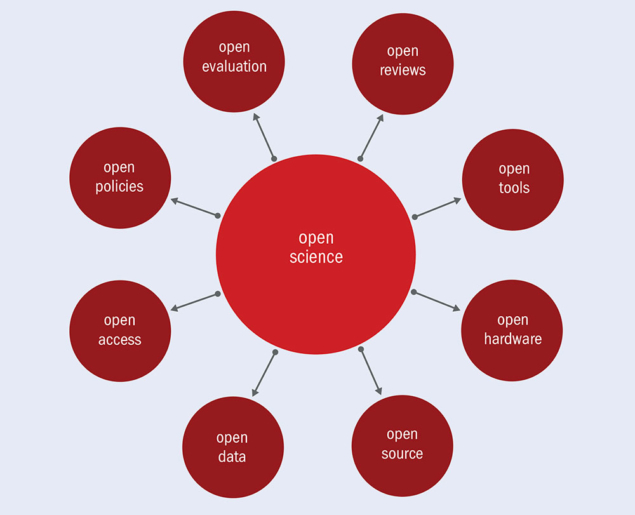

Dimensions of Open Science

Which decisions did Emma need to take in the game?

Solution

- Involve a librarian?

- Write a data management plan?

- Preregister her research plan?

- Make her data FAIR?

- Publish Open Access?

- Publish data and/or code?

UNESCO recommendation on Open Science

In 2021 UNESCO published their recommendations for Open Science. From their point of view Open Science is a tool helping to create a sustainable future. In the bold face part of the quote we see the link of Open Science to scientific integrity and also reproducibility:

“Building on the essential principles of academic freedom, research integrity and scientific excellence, open science sets a new paradigm that integrates into the scientific enterprise practices for reproducibility, transparency, sharing and collaboration resulting from the increased opening of scientific contents, tools and processes.”

Image credit: UNESCO Recommendation on Open Science, CC-BY-SA.

Image credit: UNESCO Recommendation on Open Science, CC-BY-SA.

Optional: Read the full recommendation text at https://en.unesco.org/science-sustainable-future/open-science/recommendation.

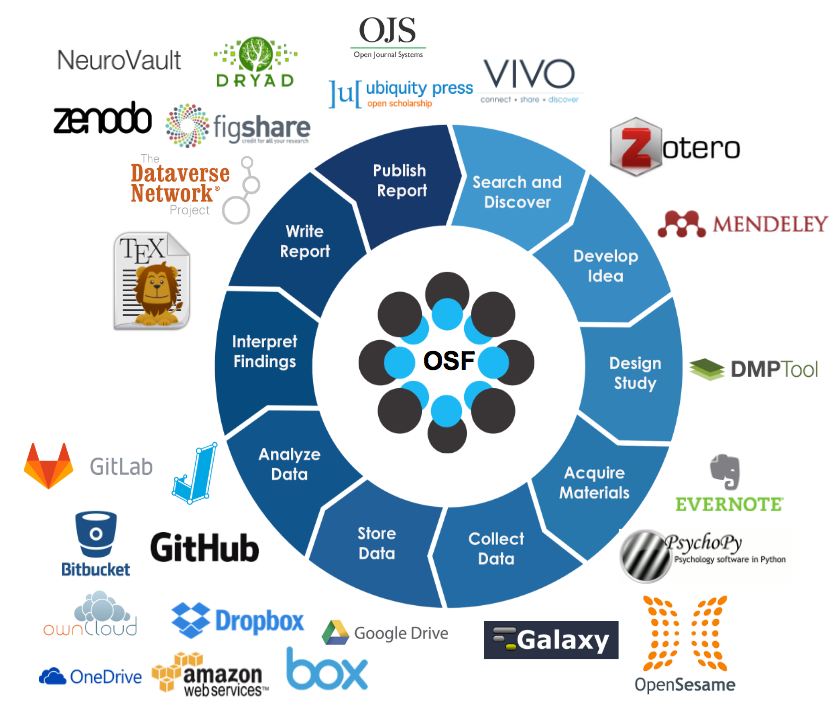

Open Science made easy by the Open Science in Psychology/Social Science initiatives

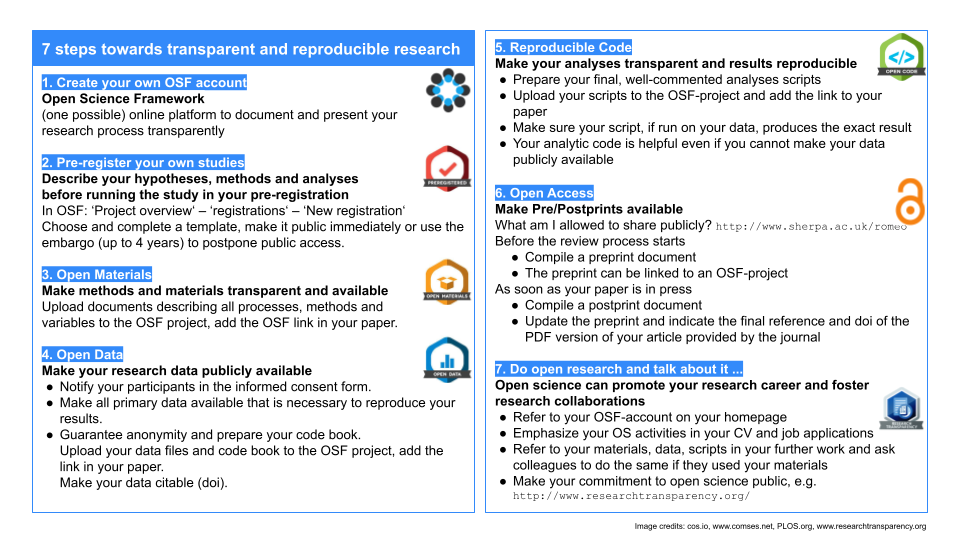

The Open Science in Psychology/Social Science initiatives summarize and explain the practice of Open Science in seven steps: https://osf.io/hktmf/. Some of these steps were also part of Emma’s decision process. Here we show an abbreviated version of the seven steps:

Image credit: Eva Furrer, unlicensed, abbreviated version of https://osf.io/hktmf/.

We will revisit the following steps in this lesson:

- Create OSF account (use easy infrastructure for collaboration)

- Pregregister your own studies

- Open Data

- Reproducible Code

- Open Access (preprints)

What is preregistration?

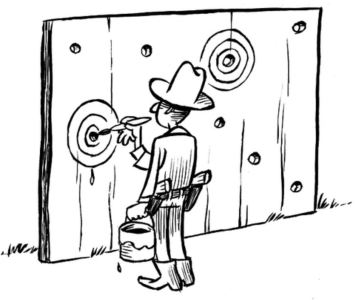

The Open up your research game and the seven steps above refer to preregistration. But what is preregistration? The Texas sharp shooter cartoon shows an unregistered experiment. The shooter first shoots and then draws the bull´s eyes around his shots. He did not preregister where he wanted to shoot before shooting.

Image credit: Illustration by Dirk-Jan Hoek, CC-BY.

When a researcher preregisters a study, the design and precise goal of the study are declared openly in advance: the bull´s eye is drawn.

Origins of preregistration: clinical trials

A clinical trial is an experiment involving human volunteers for example in the development of a new drug. Registration of clinical trials, i.e. announcing that a trial will be conducted and what its goal is before any data are collected, has become a standard since the late 1990s. It is considered a scientific, ethical and moral responsibility for all trials because:

- Informed decisions are difficult under publication bias and selective reporting, i.e. the non-publication negative results and the focus on publication of positive results which might not reflect the original goals. Publication bias and selective reporting result in a biased view of the situation.

- Describing clinical trials in progress simplifies identification of research gaps

- The early identification of potential problems contributes to improvements in the quality

⇒ The Declaration of Helsinki requires since the late 1990s: “Every clinical trial must be registered […]”

Registries (non-exhaustive list)

Here is a list of registries, where (pre)registration can be done:

Clinicaltrials.gov: US and international registry for clinical trials, first of its kind, established 1997: https://clinicaltrials.gov/

OSF: General purpose registry, also a research management tool (not just for preregistration), embargo possible for up to 4 years: https://osf.io/

Aspredicted: General purpose registry, protocols can be private forever, possibility to automatically delete an entry after 24 hours:

https://aspredicted.org/Preclinicaltirals.ed: Comprehensive listing of preclinical animal study protocols

https://preclinicaltrials.eu/PROSPERO International prospective register of systematic reviews

https://www.crd.york.ac.uk/prospero/

Quiz on registration

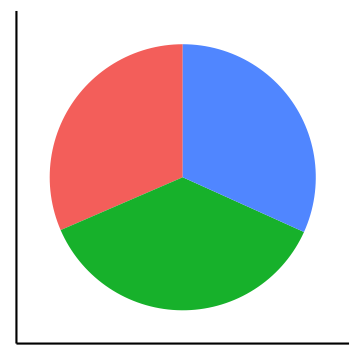

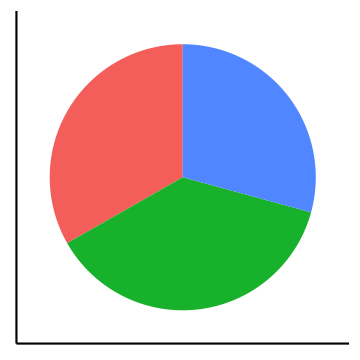

Does registration show an effect?

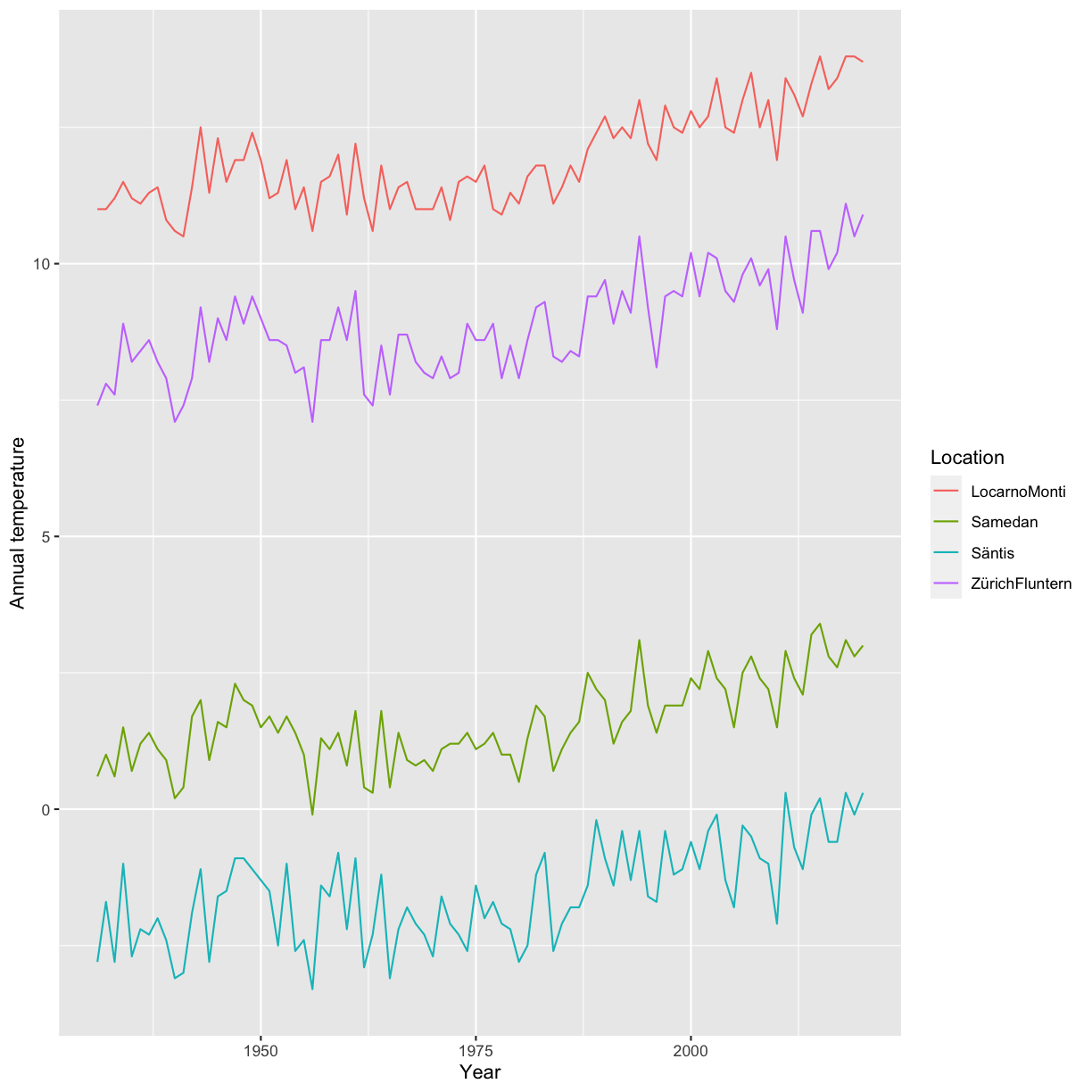

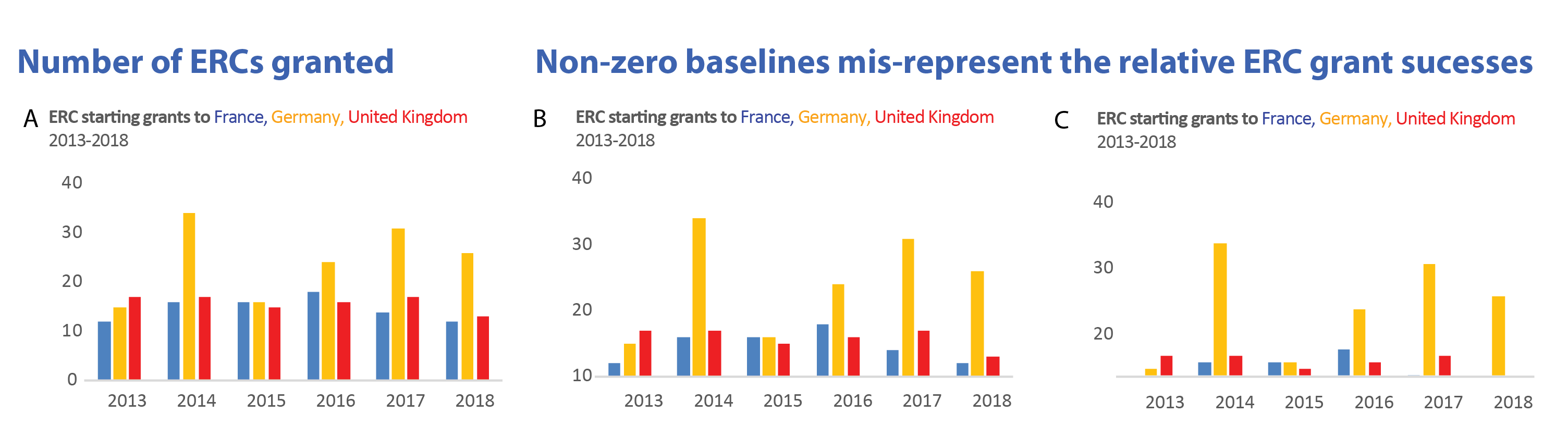

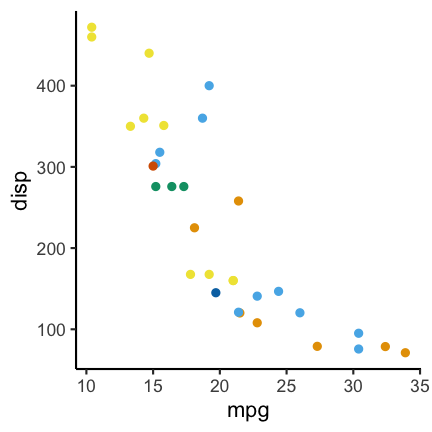

All large National Heart Lung, and Blood Institute (NHLBI) supported randomized controlled trials between 1970 and 2012 evaluating drugs or dietary supplements for the treatment or prevention of cardiovascular disease are shown with their reported outcome measure in the graphic. Trials were included if direct costs were bigger than 500,000$/year, participants were adult humans, and the primary outcome was cardiovascular risk, disease or death.

Image Credit: R Kaplan and V Irvin https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0132382, CC-BY.What is the difference between what you observe before and after the year 2000 in this graphic?

Solution

Before 2000 one sees many positive effects, i.e. treatments that lower the relative risk of cardiovascular disease, but also null effects, in general the effects are larger. After registration of the primary outcome becomes mandatory, less outcome switching can occur and many more null effects are reported. The policy change helped to overcome this particular aspect of selective reporting.

3. What is reproducibility?

Reproducibility vs replicability

Reproducibility refers to the ability of a researcher to duplicate the results of a prior study using the same materials as were used by the original investigator. This requires, at minimum, the sharing of data sets, relevant metadata, analytical code, and related software.

Replicability refers to the ability of a researcher to duplicate the results of a prior study if the same procedures are followed but new data are collected.

See S Goodman et al. https://www.science.org/doi/10.1126/scitranslmed.aaf5027 for a finer grained discussion of the concepts.

What is reproducibility?

“This is exactly how it seems when you try to figure out how authors got from a large and complex data set to a dense paper with lots of busy figures. Without access to the data and the analysis code, a miracle occurred. And there should be no miracles in science.”

See artwork by Sidney Harris at http://www.sciencecartoonsplus.com/ for an illustration of the remark “I think you should be more explicit here in step two” when a miracle occurs.

The quote is from F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7. In this publication the author asks what working reproducibly means for his daily work and comes up with “Five selfish reasons to work reproducibly”, this is even the title of the paper.

“Working transparently and reproducibly has a lot to do with empathy: put yourself into the shoes of one of your collaboration partners and ask yourself, would that person be able to access my data and make sense of my analyses. Learning the tools of the trade will require commitment and a massive investment of your time and energy. A priori it is not clear why the benefits of working reproducibly outweigh its costs.”

In this course we will learn about some of the tools Markowetz lists in his paper.

(Anti-)Example from the Markowetz paper

How bright promise in cancer testing fell apart

Image Credit: adapted from the open access article by K Baggerly and K Coombes https://projecteuclid.org/journals/annals-of-applied-statistics/volume-3/issue-4/Deriving-chemosensitivity-from-cell-lines–Forensic-bioinformatics-and-reproducible/10.1214/09-AOAS291.full.

From G Kolata https://www.nytimes.com/2011/07/08/health/research/08genes.html.

“When Juliet Jacobs found out she had lung cancer, she was terrified, but realized that her hope lay in getting the best treatment medicine could offer. So she got a second opinion, then a third. In February of 2010, she ended up at Duke University, where she entered a research study whose promise seemed stunning.

Doctors would assess her tumor cells, looking for gene patterns that would determine which drugs would best attack her particular cancer. She would not waste precious time with ineffective drugs or trial-and-error treatment. The Duke program — considered a breakthrough at the time — was the first fruit of the new genomics, a way of letting a cancer cell’s own genes reveal the cancer’s weaknesses.

But the research at Duke turned out to be wrong. Its gene-based tests proved worthless, and the research behind them was discredited. Ms. Jacobs died a few months after treatment, and her husband and other patients’ relatives have retained lawyers.”

Markowetz wonders in his paper why no one noticed these issues before it was too late. And he comes to the conclusion that the reason was that the data and analysis were not transparent and required forensic bioinformatics to untangle

Those forensic bioinformatics were provided by K Baggerly and K Coombes https://projecteuclid.org/journals/annals-of-applied-statistics/volume-3/issue-4/Deriving-chemosensitivity-from-cell-lines–Forensic-bioinformatics-and-reproducible/10.1214/09-AOAS291.full:

“Poor documentation hid an off-by-one indexing error affecting all genes reported, the inclusion of genes from other sources, including other arrays (the outliers), and a sensitive/resistant label reversal.”

Bottom line: Data analyses that are done using reproducible code and that are documented well are easier to check, for the analysts themselves and for others. Such practices decrease the chances that errors as in this example are made and this outweighs the effort and time they cost.

Episode challenge

A waste of 1000 research papers

Read the article “A Waste of 1000 Research Papers” by Ed Yong (The Atlantic, 27.5. 2019).

Question 1

Find situations in the article where publication bias, preregistration and data sharing could have aided to avoid such waste. Copy the corresponding lines from the article and name one or two reasons why you think that those concepts could have helped.

Question 2

Use smart search terms to find the concepts such that you do not need to read the entire research article.

Question 3

Go to the research article of Border et al. that is mentioned in Yong’s article and find out which of the above concepts have been respected in this article. Justify with citations.

Question 4

What are your overall conclusions?

Solution

No solution provided here.

Key Points

Scientific integrity, Open Science and reproducibility are connected.

All three themes are important for the trustworthiness of research results

The tools that will be taught in this course help to increase trustworthiness

First steps towards more reproducibility

Overview

Teaching: 60 min

Exercises: 90-120 minQuestions

Is there a reproducibility/replicability crisis?

How do I organize projects and software code to favor reproducibility?

How do I handle data in spreadsheets to favor reproducibility?

Objectives

Practice good habits in file and folder organization which favours reproducibiity

Practice good habits in data organization in spreadsheets which favour reproducibility

Some practical tips for the use of Rstudio (optional)

1. Is there a reproducibility/replicability crisis?

First, we will look at anecdotal and empirical evidence of issues with reproducibility/replicability in the scientific literature. Along the way we point to the pertinent of Markowetz’ five selfish reasons for working reproducibly. This episode hence gives some insight on the background and a few first practical tools for reproducible research practice.

Recall: Reproducibility vs Replicability

Reproducibility refers to the ability of a researcher to duplicate the results of a prior study using the same materials as were used by the original investigator. This requires, at minimum, the sharing of data sets, relevant metadata, analytical code, and related software.

Replicability refers to the ability of a researcher to duplicate the results of a prior study if the same procedures are followed but new data are collected.

See S Goodman et al.https://www.science.org/doi/10.1126/scitranslmed.aaf5027 for a finer grained discussion of the concepts.

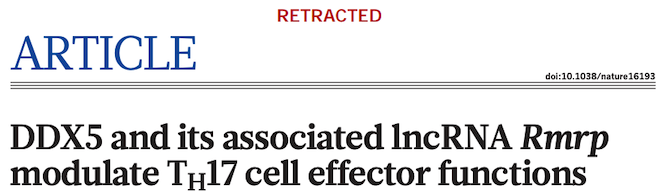

Retracted Nature publication

See this example of a publication, W Huang et al. https://www.nature.com/articles/s41586-018-0311-z, published in the prestigious journal Nature, that had issues with replication:

The publication contains an important finding on how a special type of RNA can affect T-cells, which could imply therapeutic effects and lead to new drugs for autoimmune diseases. But some of the co-authors were unable to replicate the published results and hence initiated a retraction. The retraction note states:

“In follow-up experiments to this article, we have been unable to replicate key aspects of the original results.”

For more information, see the commentary on Retraction Watch.

⇒ Replication may be an issue for other published research results, here the authors themselves discovered it. See below for some concerted efforts to study replicability of published scientific results.

Selfish reason number 5: reproducibility helps to build your reputation

“Generally, making your analyses available in this way will help you to build a reputation for being an honest and careful researcher. Should there ever be a problem with one of your papers, you will be in a very good position to defend yourself and to show that you reported everything in good faith.”

F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7

Issues with reproducibility/replication affect many fields

Is retraction due to error or non-feasibility of replication rare? Attempts at replication of results become more frequent. For example, in some fields there have been concerted efforts of aiming to replicate larger sets of studies allowing to assess reliability of results on a wider scale.

Biomedicine

F Prinz et al. attempted “To substantiate our incidental observations that published reports are frequently not reproducible with quantitative data, we performed an analysis of our early (target identification and validation) in-house projects in our strategic research fields of oncology, women’s health and cardiovascular diseases that were performed over the past 4 years.” From 67 studies only 22 were fully or partially confirmed, see https://www.nature.com/articles/nrd3439-c1.

Psychology

The Open Science Collaboration in Psychology “conducted replications of 100 experimental and correlational studies published in three psychology journals using high-powered designs and original materials when available.” in an attempt to “Estimating the reproducibility of psychological science”. Only 39 of 100 replication studies were significant and the mean effect size was about 50% compared to the original studies, see https://www.science.org/doi/10.1126/science.aac4716.

Economics

C Camerer et al. replicated “18 studies published in the American Economic Review and the Quarterly Journal of Economics between 2011 and 2014. All of these replications followed predefined analysis plans that were made publicly available beforehand, and they all have a statistical power of at least 90% to detect the original effect size at the 5% significance level.” They found significant effects in 11 of 18 studies with a mean effect size of about 66% compared to the original studies, see https://www.science.org/doi/10.1126/science.aaf0918.

Social Sciences

Again C Camerer et al. “replicate 21 systematically selected experimental studies in the social sciences published in Nature and Science between 2010 and 2015.” They found significant effects in 13 of 21 studies with mean effect size about 50% compared to the original studies, see https://www.nature.com/articles/s41562-018-0399-z.

Selfish reason number 1: reproducibility helps to avoid disaster

“This experience showed me two things. First of all, a project is more than a beautiful result. You need to record in detail how you got there. And second, starting to work reproducibly early on will save you time later. We wasted years of our and our collaborators’ time by not being able to reproduce our own results. All of this could have been avoided by keeping better track of how the data and analyses evolved over time.”

F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7

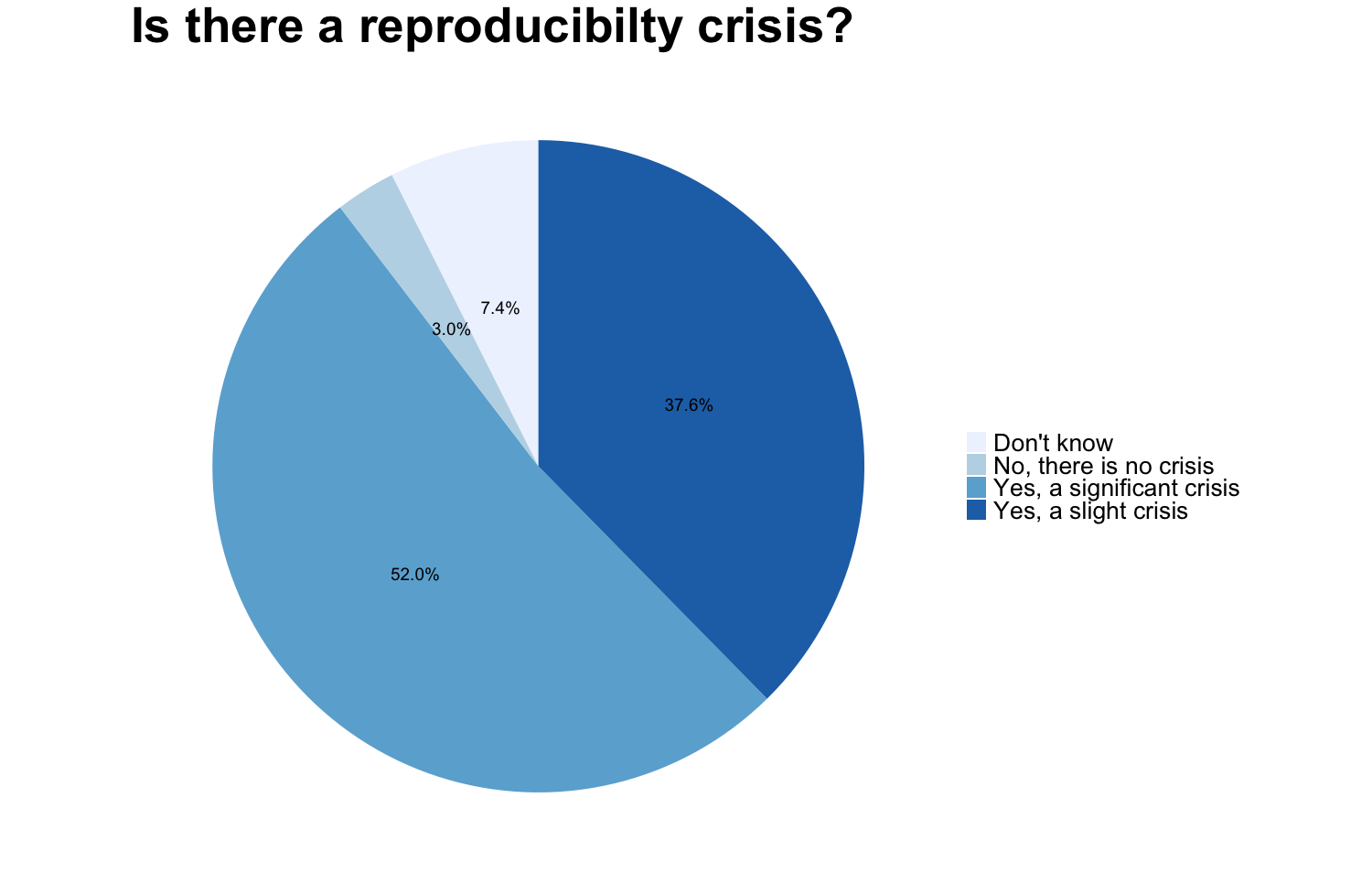

1,500 scientists lift the lid on reproducibility

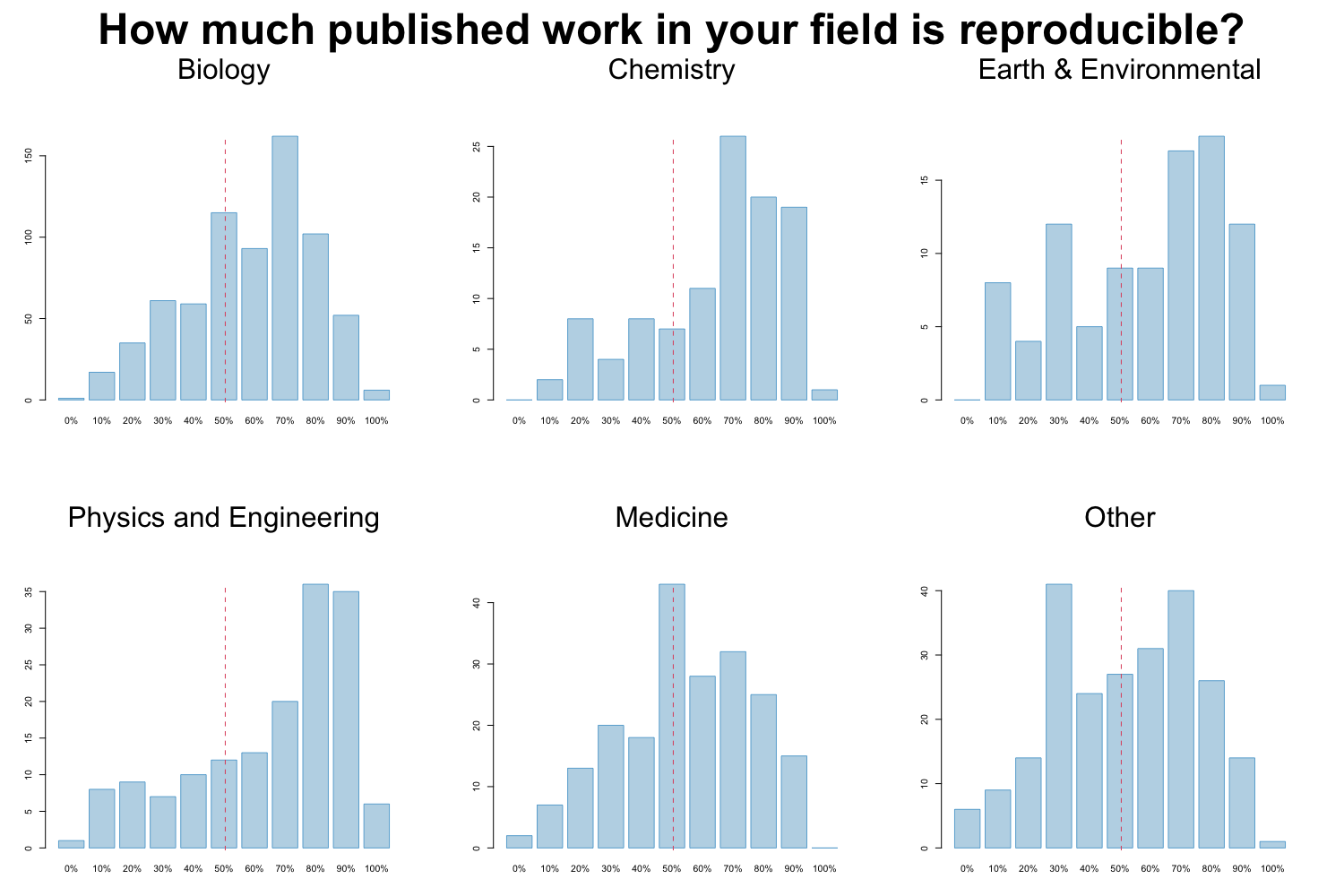

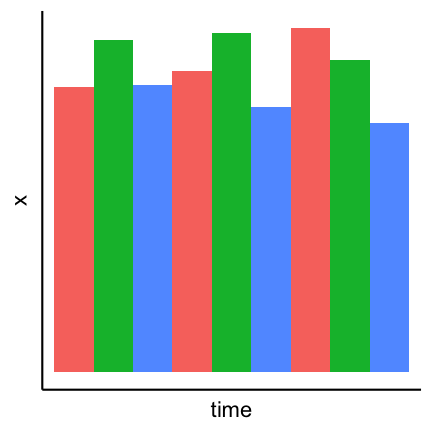

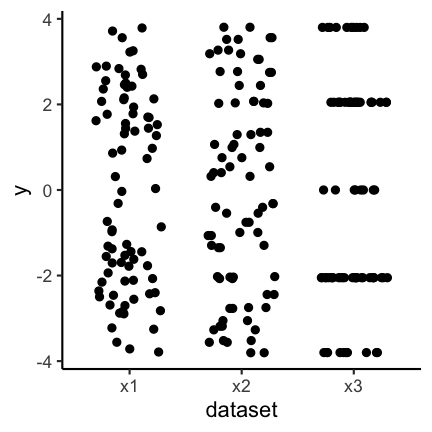

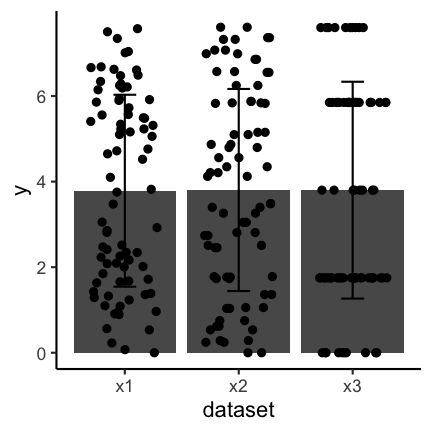

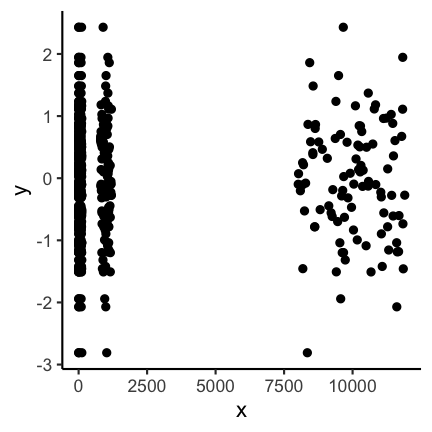

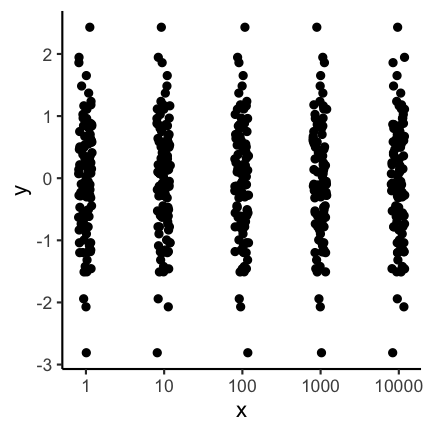

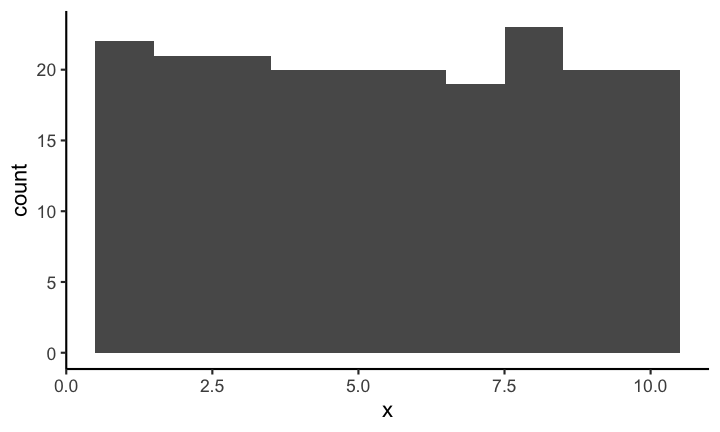

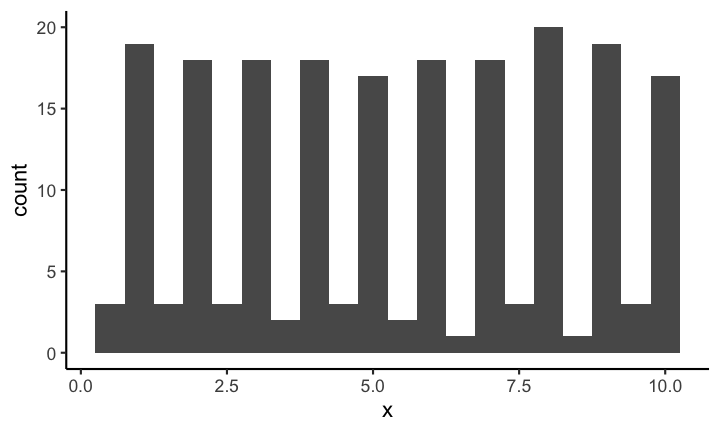

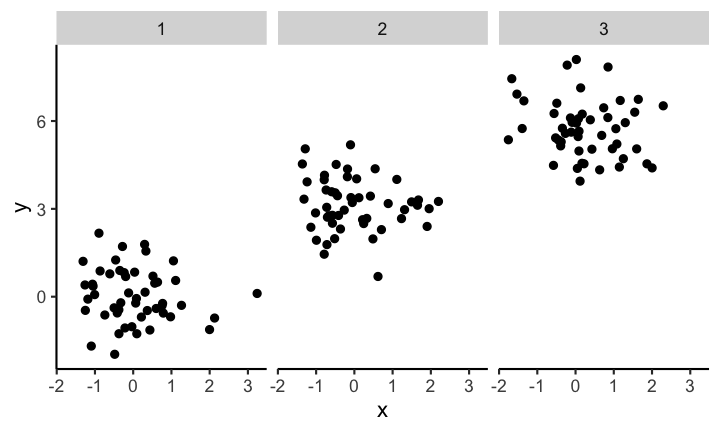

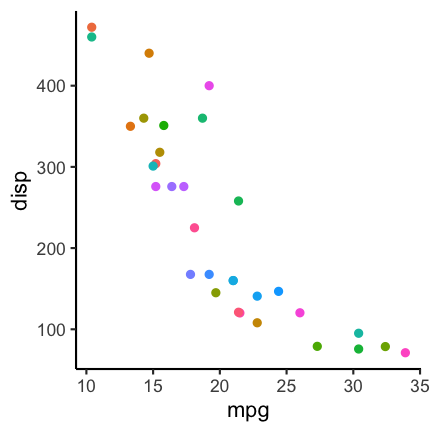

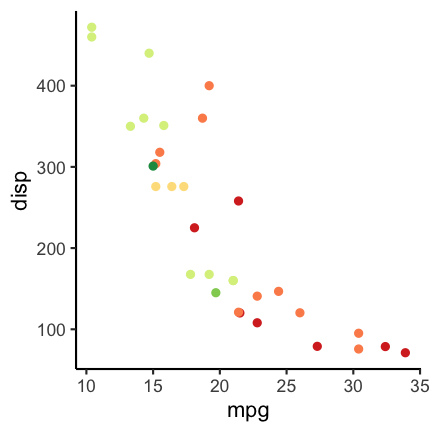

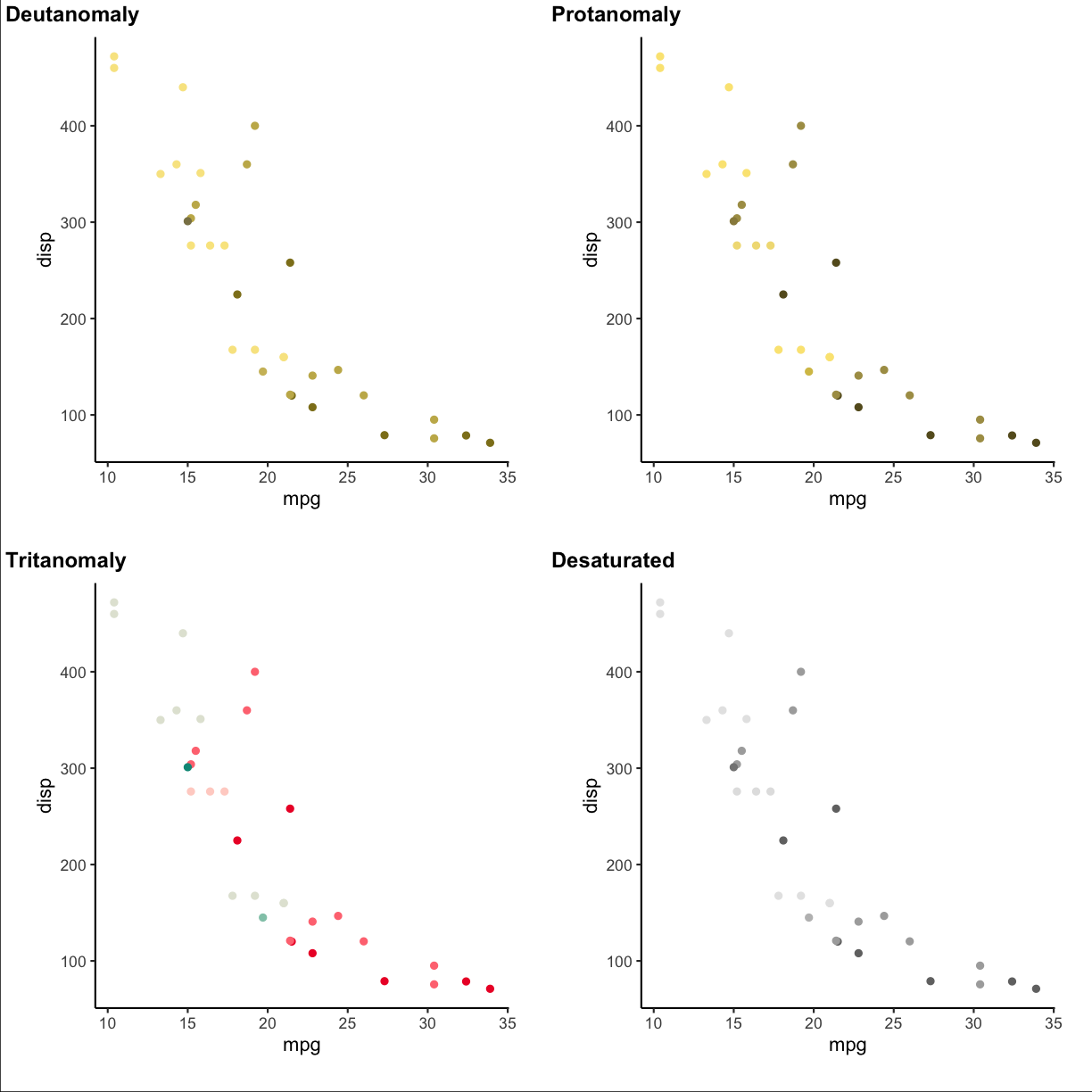

In 2016 M Baker designed a survey meant to shed “light on the ‘crisis’ rocking research.” Here we discuss some of the results of the survey, for a complete report see https://www.nature.com/articles/533452a. The two graphs reproduced from raw data of the publication show that a large proportion of researchers believes that there are issues with reproducibility but that, again in the opinion of researchers, the extent of the problem differs between disciplines. Specifically, researchers from the “hard” sciences such as chemistry and physics, more frequently believe that the published work in their field is reproducible than for example in the “softer” sciences biology and medicine.

Image credit: Figures are reproduced from https://www.nature.com/articles/533452a with the data available on Figshare

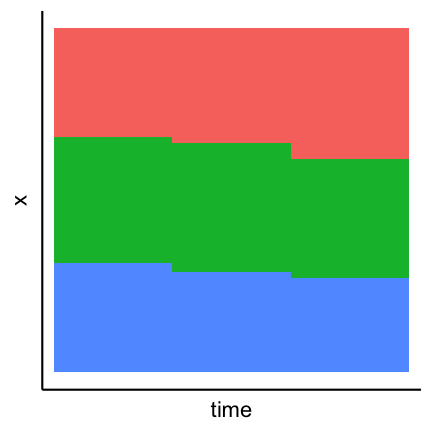

Factors contributing to irreproducible research

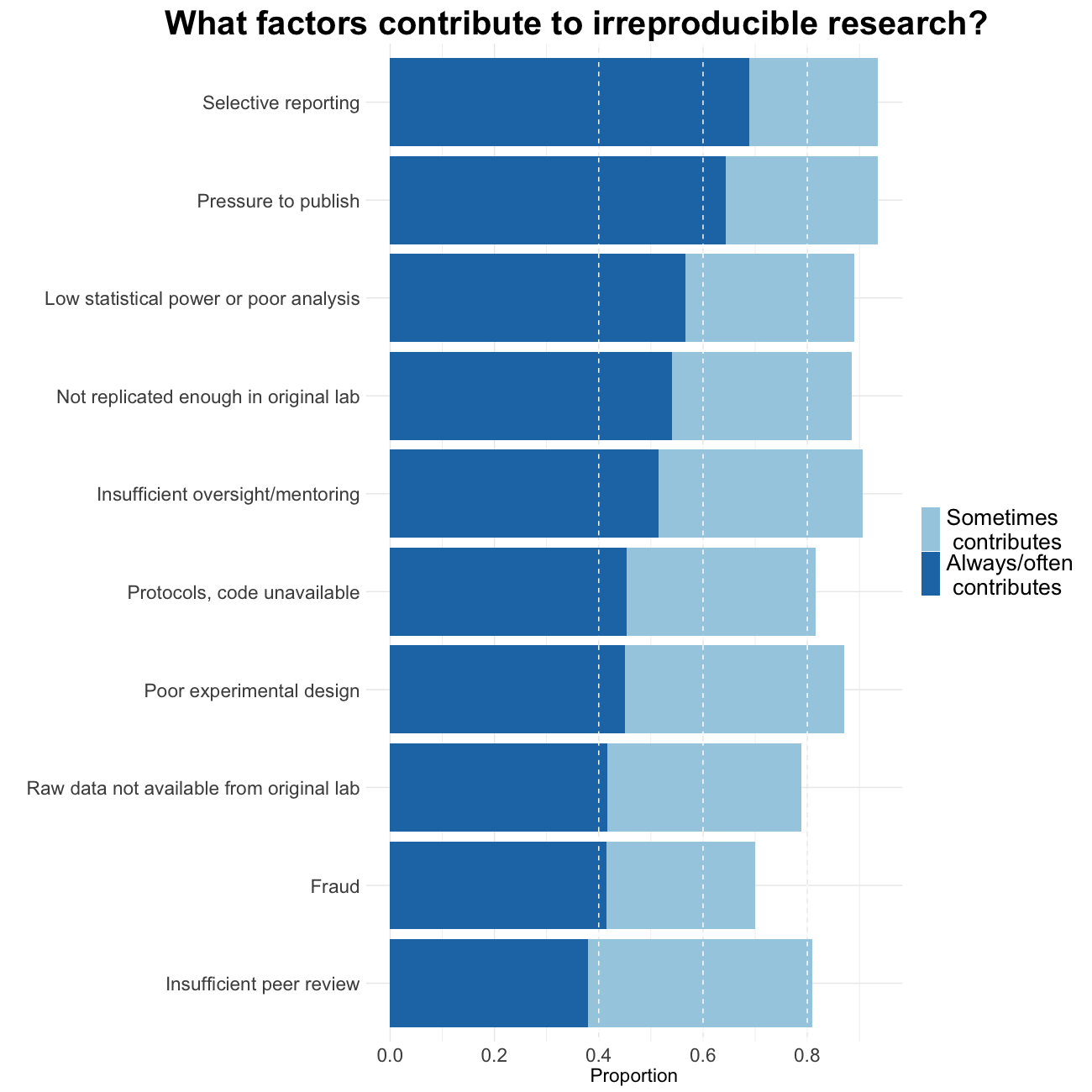

Baker also tried to evaluate which factors could contribute to this perceived reproducibility issue. Most researchers (more than 95%) believe that selective reporting and pressure to publish always/often or sometimes contribute to irreproducibility. Still about 90% believe that low statistical power or poor analysis, not enough replication in the original lab and insufficient mentoring/oversight always/often or sometimes contribute. Around 80% agree with unavailability of methods/code, poor experimental design, unavailability of raw data and unsufficient peer review as contributing factors at least sometimes. Fraud plays a more minor role in the opinion of researchers.

Image credit: Figures are reproduced from https://www.nature.com/articles/533452a with the data available on Figshare

Quiz on reproducibility/replicability

Effect size

Within the concerted replication efforts effect sizes of the replication attempts are on average (for one of them we do not have the information)

- smaller than the original effect

- approximately the same as the original effect

- bigger than the original effect

Solution

T smaller than the original effect

F approximately the same as the original effect F bigger than the original effect

Factors contributing to irreproducibility

Peeking at the content below, with which of the above factors that contribute to irreproducible research is the current episode of this course concerned?

Solution

Methods, code unavailable

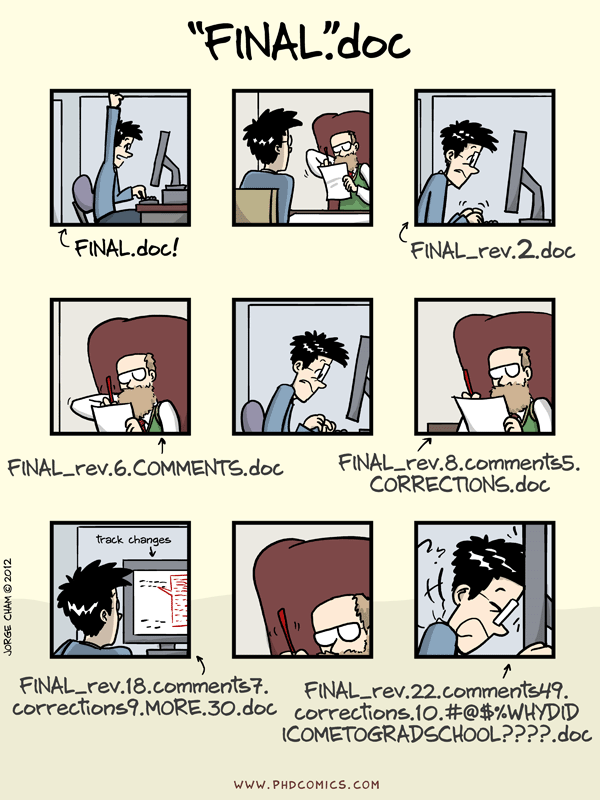

2. Organization and software

In this section we learn about simple tools to avoid the fear in Markowetz’ selfish reason number 4.

Selfish reason number 4: reproducibility enables continuity of your work

“I did this analysis 6 months ago. Of course I can’t remember all the details after such a long time.”

F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7

Project organization

The main principles of data analytic project organization is the separation of

- data

- method

- output

and the preservation of the - computational environment

Project organization checklist

To achieve these principles make sure that you follow a procedure similar to:

- Put each project in its own directory named after the project.

- Put text associated documents in the

docdirectory.- Put raw data and metadata in a

datadirectory and files generated during cleanup and analysis in aresultsdirectory.- Put project source code in the

srcdirectory.- Put external scripts or compiled programs in the

bindirectory.- Name all files to reflect their content or function.

From Good enough practices in scientific computing by G Wilson et al. https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1005510

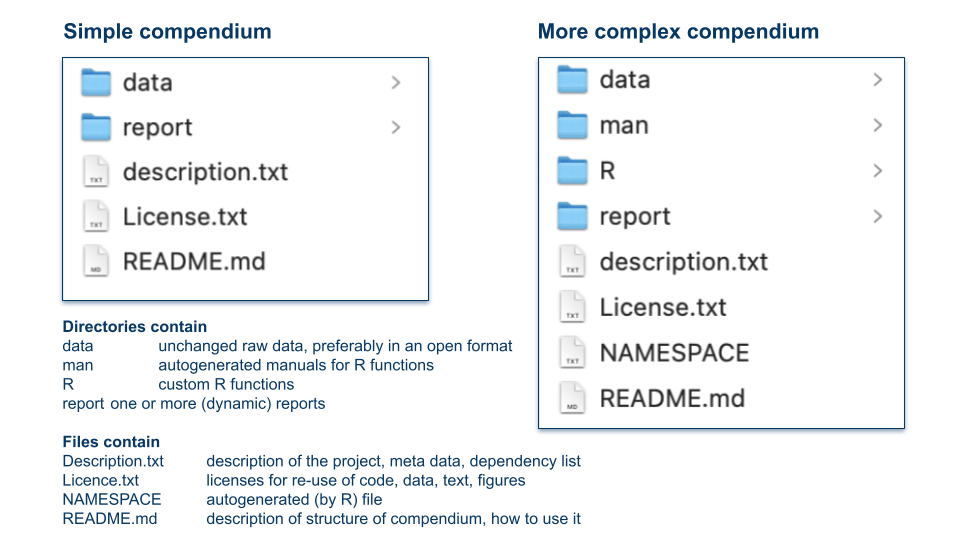

In Packaging Data Analytical Work Reproducibly Using R (and Friends) B Marwick et al. https://www.tandfonline.com/doi/full/10.1080/00031305.2017.1375986 suggest a slightly different but conceptually similar approach. They propose to organize projects as so-called “research compendia”, for example like:

Image credit: Illustration of research compendia as suggested in B. Marwick et al. by Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994355.

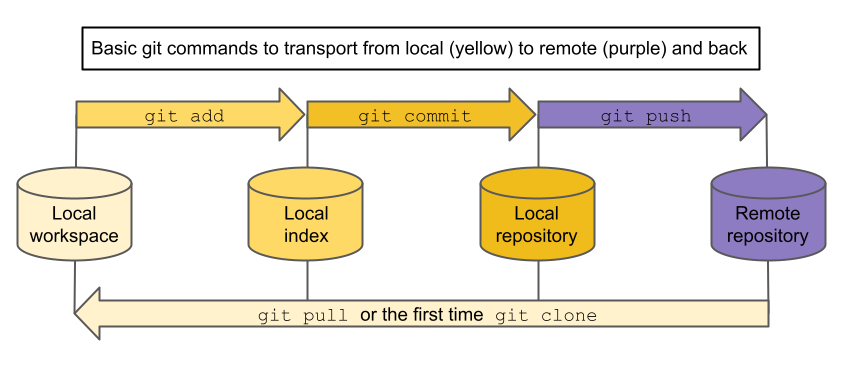

Software/code

Writing code for a data analysis instead of using a GUI based tool makes an analysis to some degree reproducible (given the availability of the data and the analogous functioning of the computing environment). But code can also be a very detailed documentation of the employed methods, at least if it is written in a way such that it is understandable.

Selfish reason number 3: reproducibility helps reviewers see it your way

“One of the reviewers proposed a slight change to some analyses, and because he had access to the complete analysis, he could directly try out his ideas on our data and see how the results changed.”

F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7

Code understandability checklist

Use the following principles that make code easier to understand and use by others and your future self

- Place a brief explanatory comment at the start of every program.

- Decompose programs into functions.

- Be ruthless about eliminating duplication.

- Search for well-maintained libraries that do what you need.

- Test libraries before relying on them.

- Give functions and variables meaningful names.

- Make dependencies and requirements explicit.

- Do not comment and uncomment code sections to control behavior.

- Provide a simple example or test data set.

⇒ Your main goal with these principles is for your code to be readable, reusable, testable

From G Wilson et al. https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1005510

On top of these high level recommendations writing and reading code is easier if one adheres to some styling rules. We have assembled our ten most important rules for code styling in R, these were influenced by https://style.tidyverse.org, https://google.github.io/styleguide/Rguide.html, https://cfss.uchicago.edu/notes/style-guide/ and by lot of experience in reading code by others and our past selves.

10 Rules for code styling (in R)

- Code styling is about readability not about correctness. The most important factor for readability is consistency which also increases writing efficiency.

- Use white space for readability, spaces around operators (e.g. +), after commas and before %>%, line breaks before each command and after each %>%.

- Control the length of your code lines to be about 80 characters. Short statements, even loops etc, can be a single line.

- Indent your code consistently, the preferred way of indentation are two spaces.

- Use concise and informative variable names, do not use spaces, link by underscore or use CamelCase. Avoid names, that are already used, e.g.,

mean,c.- Comment your code such that its structure is visible and findable (use code folding in RStudio).

- Do not use the equal sign for assignment in R, <- is the appropriate operator for this. Avoid right-hand assignment, ->, since it deteriorates readability.

- Curly braces are a crucial programming tool in R. The opening { should be the last character on the line, the closing } the first (and last) on the line.

- File naming is part of good programming style. Do not use spaces or non-standard characters, use consistent and informative names.

- Finally, do use the assistance provided by RStudio: command/control + i and shift + command/ctrl + A.

Quiz on organization and software

Duplication

Which of the following situations are meant by the principle “be ruthless about duplication”

- Copy-pasting code for several cases of the same type of calculation

- Several lines of code that are repeated at different locations in a larger script

- The duplication of statistical results with two approaches

- The same type of graph used for several cases

Solution

T Copy-pasting code for several cases of the same type of calculation

T Several lines of code that are repeated at different locations in a larger script

F The duplication of statistical results with two approaches

F The same type of graph used for several cases

Directories

Which directories would you use for cleaned data files of .csv format?

- results

- data

- doc

- results/cleaneddata

Solution

T results

F data

F doc

T results/cleaneddata

3. Data in spreadsheets

Selfish reason number 2: reproducibility makes it easier to write papers

“Transparency in your analysis makes writing papers much easier. For example, in a dynamic document (Box 1) all results automatically update when the data are changed. You can be confident your numbers, figures and tables are up-to-date. Additionally, transparent analyses are more engaging, more eyes can look over them and it is much easier to spot mistakes.”

F Markowetz https://genomebiology.biomedcentral.com/articles/10.1186/s13059-015-0850-7

Image credit: Randall Munroe/xkcd at https://xkcd.com/2180/ licensed as CC BY-NC.

Humor aside, spreadsheets have advantages and disadvantages, that can threaten reproducibility. But they are easy to use and so widespread that we better learn how to use them properly. And indeed data in spreadsheets can be organized in a way that favors reproducibility. We will summarize the recommendations of the article by K Broman and K Woo https://www.tandfonline.com/doi/full/10.1080/00031305.2017.1375989 into five checklists below. Broman and Woo promise that:

“By following this advice, researchers will create spreadsheets that are less error-prone, easier for computers to process, and easier to share with collaborators and the public. Spreadsheets that adhere to our recommendations will work well with the tidy tools and reproducible methods described elsewhere in this collection and will form the basis of a robust and reproducible analytic workflow.”

Spreadsheet consistency checklist

- Use consistent codes for categorical variables.

- Use a consistent fixed code for any missing values.

- Use consistent variable names.

- Use consistent subject identifiers.

- Use a consistent data layout in multiple files.

- Use consistent file names.

- Use a consistent format for all dates.

- Use consistent phrases in your notes.

- Be careful about extra spaces within cells.

Image credit: Image credit: copyright 2023, William F. Hertha under Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Choose good names for files and variables checklist

- No spaces

- Use underscores or hyphens or periods (only one of them)

- No special characters (&,*,%,ü,ä,ö,…)

- Use a unique, short but meaningful name

- Variable names have to start with a letter

- File names: include zero-padded version number, e.g. V013

- File names: include consistent date, e.g. YYYYMMDD

Be careful with dates checklist

- Use the ISO 8601 global standard

- Convention for dates in Excel is different on Windows and Mac computers

- Dates have an internal numerical representation

- Best to declare date columns as text, but only works prospectively

- Consider separate year, month, day columns

Image credit: Randall Munroe/xkcd at https://xkcd.com/1179/ licensed as CC BY-NC.

Image credit: Randall Munroe/xkcd at https://xkcd.com/1179/ licensed as CC BY-NC.

Make your data truly readable and rectangular checklist

- Put one information of the same form per cell

- Do not add remarks in cells which should contain numerical values, e.g. >10000

- Include one variable per column, one row per subject: a rectangle of data

- Use the first and only the first row for variable names

- Do not calculate means, standard deviations etc in the last row

- Do not color, highlight or merge cells to codify information

- Use data validation at data entry

- Be careful with commas since they may be decimal separators

- Consider write protecting a file at the end of data collection

Code book/data dictionary checklist

- Create a code book in a separate sheet or file

Code book contains

- a short description

- unit and max/min values for continuous variables

- all levels with their code for categorical variables

- ordering for ordinal variables

- All variables have to be contained in the code book

Quiz on data in spreadsheets

Variable names

What are good names for the variable containing average height per age class?

- averageheightperageclass

- av_height_agecls

- height/class

- av_height

Solution

F averageheightperageclass

T av_height_agecls

F height/class

F av_height

Ruthlessness

Choose how to best initialize the variables that contain the BMI (body mass index) of 17 subjects at three different time points.

- bmi1 <- numeric(17); bmi2 <- numeric(17); bmi3 <- numeric(17)

- bmi <- matrix(0, nrow=17, ncol=3)

- bmi <- NULL; ind <- c(0,0,0); for (i in 1:17) bmi <- rbind(bmi, ind)

Solution

F bmi1 <- numeric(17); bmi2 <- numeric(17); bmi3 <- numeric(17)

T bmi <- matrix(0, nrow=17, ncol=3)

F bmi <- NULL; ind <- c(0,0,0); for (i in 1:17) bmi <- rbind(bmi, ind)

Special care for dates

This episode was created on February 28, 2023. Enter this date as an 8-digit integer:

Solution

20230228

Once more dates

This episode was created on February 28, 2023. Enter this date in ISO 8601 coding:

Solution

2023-02-28

Missing values

Choose all acceptable codes for missing values.

- 99999

- -99999

- NA

- ‘empty cell’

- non detectable

Solution

F 99999

F -99999

T NA

T ‘empty cell’

F non detectable

Code styling

The preferred way of indenting code is

- a tab

- none

- two spaces

Solution

F a tab

F none

T two spaces

Episode challenge

Improve a spreadsheet in Excel

Considering the input on data in spreadsheets try to improve the spreadsheet

This spreadsheet contains data from 482 patients, two columns with dates and 8 columns with counts of two different markers in the blood on a baseline date, on day 1, 2 and 3 of a certain therapy.

Specifically you should check

- the plausibility of all observations (e.g. value in correct range)

- the correct and consistent format of the entries, e.g. spelling or encoding errors

- date formats

- the format of missing values

- variable names

- the overall layout of the spreadsheet (header, merged cells, entries that are not observations etc.)

Solution

No solution provided here.

Improve a spreadsheet in R

We continue to work on the spreadsheet trainingdata.xlsx. This time we use

Rto correct the same errors in the spreadsheet. Why do you think is it better to use R for this process?

Solution

No solution provided here.

Key Points

Well organized projects are easier to reproduce

Consistency is the most important principle for coding analyses and for preparing data

Transparency increases reliability and trust and also helps my future self

Facilitating reproducibility in academic publications

Overview

Teaching: 90 min

Exercises: 60-90 minQuestions

How does academic publishing work?

What is the IMRAD format?

What are reporting guidelines and why are they useful for reproducibility?

How can we judge the quality and credibility of a preprint

Objectives

Understand how the academic publishing process works

Know about IMRAD sections and detect content in articles efficiently

Find appropriate reporting guidelines and know their advantages

Review a preprint using a simple checklist

1. Primer on academic publishing

Why publish?

Results of research are published in the literature such that

- findings get disseminated

- other researchers can assess “what is known”

- findings can get synthesized into overall evidence

- evidence can inform policy

but also such that

- researchers can document their output

- researchers can be assessed for career advancement

- researchers can build a “reputation”

⇒ Publication advances science and the career of scientists

Where to publish?

Most scientific publications are in academic journals or books. Journals may be

- discipline specific or across several disciplines

- run by learned societies or by commercial publishers

- open or closed access

- have more or less “impact”

- existing for over 100 years or only a short time

- exist in print/online or only online

- “predatory”

There may be more than 30’000 journals publishing 2’000’000 articles, aka papers, per year.

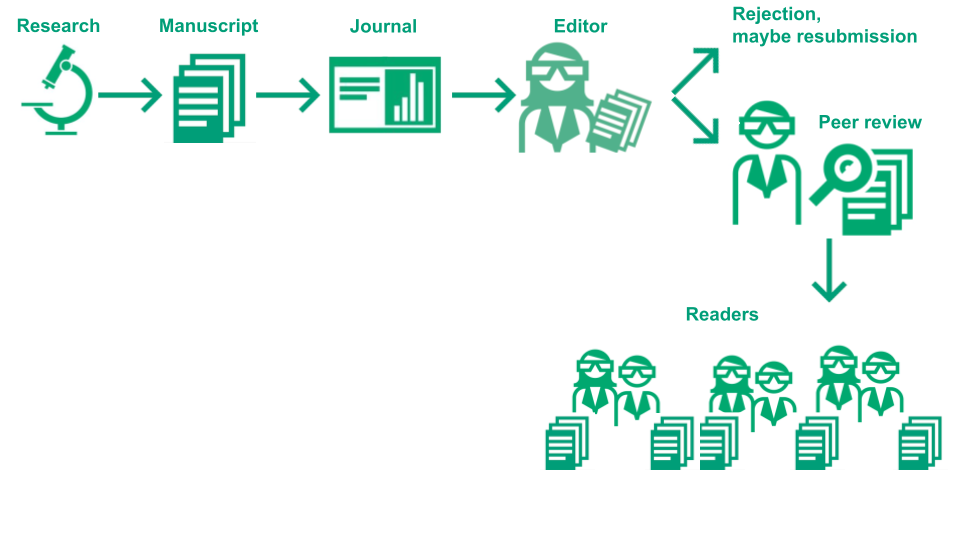

How does the process of publication in journals work?

Authors have to follow several steps, which are in general:

- Carry out a study or another type of research project, write an article, select a journal

- Submit the article to peer review at the journal

- The article will be assigned an editor and undergoes formal checks

- The editor decides if it will be peer-reviewed or rejected directly (desk-rejection)

- The editor searches peer reviewers, usually at least two independent and anonymous experts

- The article is peer-reviewed resulting in review reports

- The editor assesses the reports and makes a decision among:

- Rejection: the article cannot be published at this journal

- Revision: the article has to undergo changes, sometimes major, before publication

- Acceptance: the article can be published as it is, most often conditional on small cosmetic changes

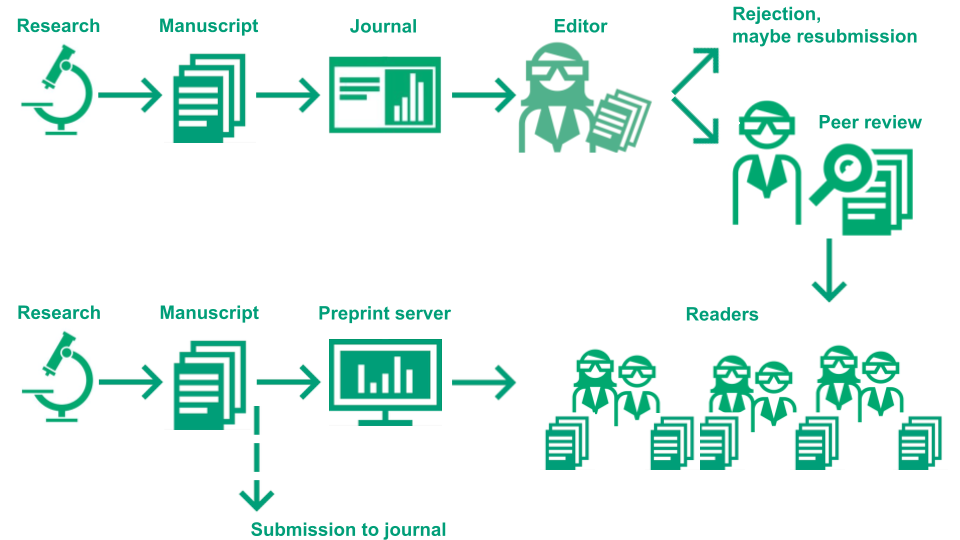

The below image illustrates this process:

Image Credit: (Part of) Illustration of the academic publication process by the Center for Reproducible Science (E. Furrer), CC-BY, https://doi.org/10.5281/zenodo.7994313.

What is a doi and what is Crossref?

Since the goal of scientific articles is to contribute to the advancement of science they need to be findable and identifiable for future work. For that a necessary condition is that they have a unique identifier, which is nowadays, of course, a digital identifier.

From Wikipedia:

“A digital object identifier (DOI) is a persistent identifier or handle used to identify objects uniquely, standardized by the International Organization for Standardization (ISO). An implementation of the Handle System, DOIs are in wide use mainly to identify academic, professional, and government information, such as journal articles, research reports, data sets, and official publications. DOIs have also been used, however, to identify other types of information resources, such as commercial videos.”

Since a DOI is a unique identifier you can find any article by concatenating https://doi.org/ and the DOI of the article and pasting it in the URL field of your browser. Try it out with 10.1186/s13059-015-0850-7. DOIs are issued by, for example, Crossref:

“Crossref (formerly styled CrossRef) is an official digital object identifier (DOI) Registration Agency of the International DOI Foundation. It is run by the Publishers International Linking Association Inc. (PILA) and was launched in early 2000 as a cooperative effort among publishers to enable persistent cross-publisher citation linking in online academic journals.”

Hence Crossref is the organisation which registers most doi for academic publications. (Source Wikipedia)

Indexing of journals/publications

Indexation of a journal, i.e. the inclusion of its articles in a meta data base, is considered a reflection of the quality of the journal. Indexed journals may be of higher scientific quality as compared to non-indexed journals. Examples of indexes are:

- Pubmed/MEDLINE https://pubmed.ncbi.nlm.nih.gov/

- Directory of Open Access Journals https://doaj.org/

- Thomson Reuters Journal Citation Reports https://clarivate.com/webofsciencegroup/solutions/journal-citation-reports

Many universities also have in-house databases for the articles produced by their researchers: at the University of Zurich, for example, this is ZORA https://www.zora.uzh.ch/

Why is peer review part of the publication process?

- Peer review allows to check and improve research.

- Peer review serves as scientific quality control.

- Peer review provides a form of self regulation for science.

- Peer review makes publication (more) trustworthy

Before you review for the first time see Open Reviewer Toolkit

Known issues of the process

Issues with peer review

- Anonymity of peer reviewers but not authors

- Conflict of interest of peer reviewers: plagiarism, delays, favouritism, biases

- Peer reviewers may not be competent enough

- Peer reviewers are volunteers and almost not rewarded

- The process is slow and unpredictable

- Increasing numbers of publications make it more and more unfeasible

Issues with the publication system

- Sensational results are privileged over solid but less sensational research

- Lacking equity, e.g. already published authors are given cumulatively more credit (Matthew effect)

- Expensive either in subscription fees in order to be able read a journal or processing charges to publish open to everyone

- Evaluation of researchers is publication based and this incentivises fast but not rigorous research (“publish or perish”)

Preprints

Preprints are a relatively new form of publication which helps to overcome some of the issues with peer review and with the publication system. See the extension of the above graphic including preprints in the publication process:

Image Credit: Illustration of the academic publication process by Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994313.

Image Credit: Illustration of the academic publication process by Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994313.

See also J Berg et al. for a comment on the introduction of preprints in Biology: https://www.science.org/doi/abs/10.1126/science.aaf9133.

Quiz on academic publishing

Peer review

Which statements are correct for the practice of peer review in academic publishing?

- peer review contributes to keep the quality of publications to high standard

- peer reviewers are financially rewarded for their contribution

- peer review may take a long time and its outcome does not always depend on the quality of a publication

- peer reviewers are always objective experts not pursuing their personal interest

- one publication is always peer reviewed by exactly one expert

Solution

T peer review contributes to keep the quality of publications to high standard

F peer reviewers are financially rewarded for their contribution

T peer review may take a long time and its outcome does not always depend on the quality of a publication

F peer reviewers are always objective experts not pursuing their personal interest

F one publication is always peer reviewed by exactly one expert

Unfairness

The publication system is unfair since authors from prestigious institutions or authors with already a lot of publication are privileged, for them it is easier to publish since editors and reviewers decide in their favor more often. Such a type of effect is not unique to academic publishing but occurs in different aspects of society.

A common name for this effect is:

Solution

Matthew effect

Preprints

Why do preprints help to overcome some of the issues with peer review and with the publication system?

Solution

Preprints avoid conflicts of interests of peer reviewers, allow certain and fast publication and are completely free of charge.

2. What is the IMRAD format?

What is IMRAD?

The acronym IMRAD stands for “Introduction, Methods, Results and Discussion”. IMRAD is a widespread format in the biomedical, natural and social science research literature for reports on empirical studies. It is a convenience to readers because they can easily find the specific information they may be looking for in an article. See the article of J Wu https://link.springer.com/article/10.1007/s10980-011-9674-3 for a quick overview illustration:

Image credit: Illustration of the IMRAD concept, by Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994280.

Image credit: Illustration of the IMRAD concept, by Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994280.

R Day writes about the history of scientific publication in his article, “The Origins of the Scientific Paper: The IMRAD Format”. He specifically mentions the scientific method and its cornerstone the principle of reproducibility of results. The IMRAD Format has been introduced in order to represent the steps of the scientific method.

“Eventually, in 1972, the IMRAD format became “standard” with the publication of the American National Standard for the preparation of scientific papers for written or oral presentation.”

R Day American Medical Writers Association, 1989, Vol 4, No 2., 16–18. This article is not easily obtainable online, potentially your library can obtain it for you. If this is not possible, please contact the authors of this lesson.

What is the scientific method?

The Center for Reproducible Science at the University of Zurich uses a simplified graphical representation of the scientific method in its communications:

Image credit: Illustrations of meta research and the research cycle by Luca Eusebio and Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994222.

Image credit: Illustrations of meta research and the research cycle by Luca Eusebio and Eva Furrer, CC-BY, https://doi.org/10.5281/zenodo.7994222.

“What is the Scientific Method?” is a philosophical question that we can not answer in full detail here and it may be one of the questions to which there is no single correct answer. We will use the Stanford Encyclopedia of Philosophy definition as a first approximation:

“Often, ‘the scientific method’ is presented in textbooks and educational web pages as a fixed four or five step procedure starting from observations and description of a phenomenon and progressing over formulation of a hypothesis which explains the phenomenon, designing and conducting experiments to test the hypothesis, analyzing the results, and ending with drawing a conclusion.”

https://plato.stanford.edu/entries/scientific-method/

This view coincides with a common approach to empirical research, even if it may be an oversimplification and a strong generalization, we assume an underlying scientific process for this lesson that is close to such an approach.

What should the IMRAD sections contain?

In 1997 the International Committee of Medical Journal Editors published “Uniform Requirements” on the structure of articles:

“The text of observational and experimental articles is usually (but not necessarily) divided into sections with the headings Introduction, Methods, Results, and Discussion. Long articles may need subheadings within some sections (especially the Results and Discussion sections) to clarify their content. Other types of articles, such as case reports, reviews, and editorials, are likely to need other formats. Authors should consult individual journals for further guidance.”

The Uniform Requirements have been updated in December 2021 and the most current version can be found here: http://www.icmje.org/about-icmje/faqs/icmje-recommendations/ The 1997 version of the requirements is avaliable here: https://www.icmje.org/recommendations/archives/1997_urm.pdf.

The document contains much more than advice on structuring a manuscript, e.g. authorship roles, peer review roles etc. Please read the chapter/section “Manuscript Sections” in one of the two versions in order to get an overview of the expected content of the IMRAD sections.

There is a long list of journals that state that they follow these requirements http://www.icmje.org/journals-following-the-icmje-recommendations/

Quiz on IMRAD

Cornerstone of the scientific method

Hippocrates is credited as the discoverer of the scientific method. But he did not clearly state its cornerstone. The cornerstone of the scientific method is the:

Solution

reproducibility of results

Introduction section

The introduction section in an article following the IMRAD structure should contain?

- a short overview over the data and main conclusions of the article

- the purpose/objective of the presented research

- a complete and detailed background of the wider research area

Solution

F a short overview over the data and main conclusions of the article

T the purpose/objective of the presented research

F a complete and detailed background of the wider research area

Methods section

The methods section in an article following the IMRAD structure should contain

- a descriptive analysis of the collected data such that appropriate methods can be chosen for the analysis

- enough information such that a reader would in theory be able to reproduce the results

- only information that was available before data collection

Solution

F a descriptive analysis of the collected data such that appropriate methods can be chosen for the analysis

T enough information such that a reader would in theory be able to reproduce the results

T only information that was available before data collection

Statistical methods

The statistical methods subsection of the methods section in an article following the IMRAD structure should contain

- detailed information software and packages

- only contain p-values an no effect sizes or estimates of the precision

- distinguish pre-specified parts of the analysis from parts that have been done in an explorative way after looking at the collected data

Solution

T detailed information software and packages

F only contain p-values an no effect sizes or estimates of the precision

T distinguish pre-specified parts of the analysis from parts that have been done in an explorative way after looking at the collected data

Discussion section

The discussion section in an article following the IMRAD structure should contain

- limitations of the study

- those conclusions in view of the goals of the study that are supported by the results

- a detailed summary of all results

Solution

T limitations of the study

T those conclusions in view of the goals of the study that are supported by the results

F a detailed summary of all results

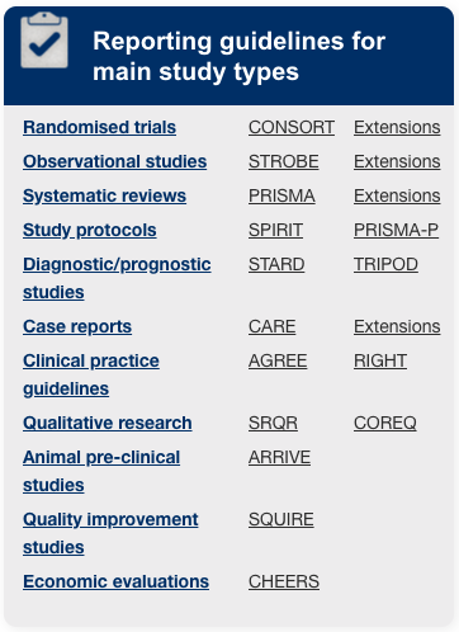

3. Reporting guidelines

Reporting guidelines are checklists that are based on wide agreement in a field providing more detailed guidance on the contents of IMRAD section.

Goals of reporting guidelines

The goals of Reporting Guidelines are summarized in I Simera and D Altman https://onlinelibrary.wiley.com/doi/full/10.1111/ijcp.12168. They summarize some key principles for responsible reserach reporting:

“Researchers should present their results clearly, honestly, and without fabrication, falsification or inappropriate data manipulation.”

“Researchers should strive to describe their methods clearly and unambiguously so that their findings can be confirmed by others.”

“Researchers should follow applicable reporting guidelines. Publications should provide sufficient detail to permit experiments to be repeated by other researchers.”

Good reporting is an ethical imperative

The WMA Declaration of Helsinki – Ethical Principles for Medical Research Involving Human Subjects states:

“Researchers, authors, sponsors, editors and publishers all have ethical obligations with regard to the publication and dissemination of the results of research. Researchers have a duty to make publicly available the results of their research on human subjects and are accountable for the completeness and accuracy of their reports. All parties should adhere to accepted guidelines for ethical reporting. Negative and inconclusive as well as positive results must be published or otherwise made publicly available. […]”

Good reporting is required by many journals

For example the Reporting requirements of the Nature Research journals aim to improve the transparency of reporting and reproducibility of published results across all areas of science. Before peer review, the corresponding author must complete an editorial policy checklist to ensure compliance with Nature Research editorial policies; where relevant, manuscripts sent for review must include completed reporting summary documents.

Nature portfolio Reporting Summary https://www.nature.com/documents/nr-reporting-summary-flat.pdf Nature Reporting requirements and reproducibility editorials https://www.nature.com/nature-portfolio/editorial-policies/reporting-standards#editorials

Database of reporting guidelines

“The EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network is an international initiative that seeks to improve the reliability and value of published health research literature by promoting transparent and accurate reporting and wider use of robust reporting guidelines.”

“It is the first coordinated attempt to tackle the problems of inadequate reporting systematically and on a global scale; it advances the work done by individual groups over the last 15 years.”

http://www.equator-network.org/reporting-guidelines/

The MDAR framework

“We were motivated to develop the MDAR Framework as part of our own and others’ attempts to improve reporting to drive research improvement and ultimately greater trust in science. Existing tools, such as the ARRIVE guidelines, guidance from FAIRSharing, and the EQUATOR Network, speak to important sub-elements of biomedical research. This new MDAR Framework aims to be more general and less deep, and therefore complements these important specialist guidelines.”

M McLeod et al. https://www.pnas.org/content/118/17/e2103238118

Other examples of reporting guidelines

M Michel et al. http://dmd.aspetjournals.org/content/dmd/48/1/64.full.pdf

T Hartung et al.https://www.altex.org/index.php/altex/article/view/1229

S Cruz Rivera et al. https://www.nature.com/articles/s41591-020-1037-7.pdf

M Appelbaum https://psycnet.apa.org/fulltext/2018-00750-002.html

⇒ also available for qualitative and mixed methods

R Poldrack et al. https://www.sciencedirect.com/science/article/pii/S1053811907011020?via%3Dihub

L Riek https://dl.acm.org/doi/pdf/10.5898/JHRI.1.1.Riek

Benefits of reporting guidelines

Benefits for researchers

Guidelines helps at protocol stage, e.g. with examples how to reduce the risk of bias

Useful reminder of all necessary details at writing stage, especially for junior researchers

Appropriate reporting allows the replication or inclusion in meta research projects

Adherence increases chances of article acceptance at journals

Benefits for peer reviewers

Peer review is an important step but limited guidance is available

Key issues and methods that should be covered in an article can be found in reporting guideline

If journal requests a completed checklist approach even easier

Criticism can be justified by pointing to reporting guideline (or their explanation documents)

But not a guarantee for a high quality study

Example: reporting of methods

“t-tests were used for comparisons of continuous variables and Fisher’s Exact test or Chi-squared test (where appropriate) were used for comparisons of binary variables”

versus

“The primary outcome, time to […], was analysed using a two-sample Wilcoxon rank-sum test. The secondary outcomes of […] were analysed using the Chi square and Fisher’s exact test, respectively, and the secondary outcome of time to […] was analysed using a two- sample Wilcoxon rank-sum test. All analyses were carried out on a per protocol basis using [software version]”

courtesy of M. Schussel of the Equator Network

Quiz on reporting guidelines

Reporting guidelines

Reporting guidelines are

- only used in biomedicine

- based on a wide consensus of experts

- mainly useful for the reader

Solution

F only used in biomedicine

T based on a wide consensus of experts

F mainly useful for the reader

JARS quiz 1

Look at the Journal Article Reporting Standards for Quantitative Research in Psychology: The APA Publications and Communications Board Task Force Report (JARS)

M Appelbaum et al. https://doi.apa.org/fulltext/2018-00750-002.htmlThe guideline suggests to to group all hypotheses, analyses, and conclusions into

- significant and non-significant

- primary, secondary, and exploratory

- novel, derived, and replication

Solution

F significant and non-significant

T primary, secondary, and exploratory

F novel, derived, and replication

JARS quiz 2

Look at the Journal Article Reporting Standards for Quantitative Research in Psychology: The APA Publications and Communications Board Task Force Report (JARS)

M Appelbaum et al. https://doi.apa.org/fulltext/2018-00750-002.htmlFor publications that report on new data collections regardless of research design the guideline includes information on:

- where to report on registration of the underlying study

- where to report on the availabililty of data

- where to report a manual of procedures allowing replication

Solution

T where to report on registration of the underlying study: mainly for clinical trials

T where to report on the availabililty of data: specifically only for meta analysis

T where to report a manual of procedures allowing replication: specifically for experimental studies

JARS quiz 3

Look at the Journal Article Reporting Standards for Quantitative Research in Psychology: The APA Publications and Communications Board Task Force Report (JARS)

M Appelbaum et al. https://doi.apa.org/fulltext/2018-00750-002.htmlIn the data diagnostics and analytic strategy sections the guideline suggest that information on the following be reported

- in which case to exclude the data of participants from the study at the analysis stage

- how to deal with missing data

- which precise inferential statistics procedure to use

Solution

T in which case to exclude the data of participants from the study at the analysis stage

T how to deal with missing data

F which precise inferential statistics procedure to use: the guideline mentions a strategy, i.e. not a single procedure; it also suggests that this is to be specified for each type of hypothesis

4. Quality and credibility of a preprint: the Precheck checklist

What is Markdown and why do we learn about it?

Markdown is a lightweight markup language for creating formatted text using a plain-text editor. The goal is an easy to write and and easy to read format, even as raw code. It is traditionally used for so-called readme files in software development and extensively as a tool to produce html code for websites. There are several flavors of the language that are used in different places but the basics are the same almost anywhere.

Since a file containing Markdown text only contains plain text and no binary information, it is a lightweight format. Moreover, changes in Markdown files are particularly easy to track.

For a reference sheet of the syntax, please see here: https://www.markdownguide.org/cheat-sheet/

We introduce Markdown here because it will be used in the following episodes of this course and we start to practice it while learning about and using the PRECHECK checklist.

Markdown

Use the online Markdown editor Dillinger to create your first Markdown document including a title, two numbered sections one containg an itemized list and the other a numbered list. Do also include bold and italics font. You can use whatever text you like if nothing else comes to mind you may simple use lorem ipsum

Solution

No solution provided here.

Introduction to PRECHECK

As we have already seen preprints are manuscripts describing scientific studies that have not been peer-reviewed, that is, checked for quality by an unbiased group of scientists in the same field.

Preprints are typically posted online on preprint servers (e.g. BioRxiv, MedRxiv, PsyRxiv) instead of scientific journals. Anyone can access and read preprints freely, but because they are not verified by the scientific community, they can be of lower quality, risking the spread of misinformation. When the COVID-19 pandemic started, a lack of understanding of preprints has led to low-quality research gaining popularity and even infiltrating public policy.

Inspired by such events, PRECHECK was created: a checklist to help assess the quality of preprints in psychology and medicine, and judge their credibility. This checklist was created with scientifically literate non-specialists in mind, such as students of medicine and psychology, and science journalists. The contents of PRECHECK are reproduced here with permission.

The checklist contains 4 items, see below or on the linked website. When using PRECHECK on a preprint read each item and the Why is this important? Section underneath each of them. Check if the preprint you are reading fulfills the item’s criteria - if yes, write down a yes for this item. In doing so use your knowledge of the IMRAD structure and smart searching on the website or the pdf.

Generally, the more “yes” on the checklist your preprint gets, the higher its quality, but this is only a superficial level of assessment. For a thorough, discriminative analysis of a preprint, you also need to consult the related Let’s dig deeper Sections underneath most items. When using the checklist, it is recommended that you have both the preprint itself as a pdf, and the webpage on the preprint server where the preprint was posted at hand. You can also check online whether the preprint has already been peer reviewed and published in a journal.

The checklist works best for studies with human subjects, using primary data (that the researchers collected themselves) or systematic reviews, meta-analyses and re-analyses of primary data. It is not ideally suited to simulation studies (where the data are computer-generated). In general, if the study sounds controversial, improbable, or too good to be true, we advise you to proceed with caution when reading the study and being especially critical.

The PRECHECK checklist

Below you find the checklist together with Why is this important? and Let’s dig deeper Sections. It can also be directly accessed in the Markdown PRECHECK checklist (without the Why is this important? Sections).

1. Research question

Is the research question/aim stated?

Why is this important?

A study cannot be done without a research question/aim. A clear and precise research question/aim is necessary for all later decisions on the design of the study. The research question/aim should ideally be part of the abstract and explained in more detail at the end of the introduction.

2. Study type

Is the study type mentioned in the title, abstract, introduction, or methods?

Why is this important?

For a study to be done well and to provide credible results, it has to be planned properly from the start, which includes deciding on the type of study that is best suited to address the research question/aim. There are various types of study (e.g., observational studies, randomised experiments, case studies, etc.), and knowing what type a study was can help to evaluate whether the study was good or not.

What is the study type?

Some common examples include:

observational studies - studies where the experimental conditions are not manipulated by the researcher and the data are collected as they become available. For example, surveying a large group of people about their symptoms is observational. So is collecting nasal swabs from all patients in a ward, without having allocated them to different pre-designed treatment groups. Analysing data from registries or records is also observational. For more information on what to look for in a preprint on a study of this type, please consult the relevant reporting guidelines: STROBE.

randomised experiments - studies where participants are randomly allocated to different pre-designed experimental conditions (these include Randomised controlled trials [RCTs]). For example, to test the effectiveness of a drug, patients in a ward can be randomly allocated to a group that receives the drug in question, and a group that receives standard treatment, and then followed up for signs of improvement. For more information on what to look for in a preprint on a study of this type, please consult the relevant reporting guidelines: CONSORT.

case studies - studies that report data from a single patient or a single group of patients. For more information on what to look for in a preprint on a study of this type, please consult the relevant reporting guidelines: CARE.

systematic reviews and meta-analyses - summaries of the findings of already existing, independent studies. For more information on what to look for in a preprint on a study of this type, please consult the relevant reporting guidelines: PRISMA.

Let’s dig deeper

If the study type is not explicitly stated, check whether you can identify the study type after reading the paper. Use the question below for guidance:

- Does the study pool the results from multiple previous studies? If yes, it falls in the category systematic review/meta-analysis.

- Does the study compare two or more experimenter-generated conditions or interventions in a randomised manner? If yes, it is a randomised experiment.

- Does the study explore the relationship between characteristics that were not experimenter-generated? If yes, then it is an observational study

- Does the study document one or multiple clinical cases? If yes, it is a case study.

3. Transparency

a. Is a protocol, study plan, or registration of the study at hand mentioned?

b. Is data sharing mentioned? Mentioning any reasons against sharing also counts as a ‘yes’. Mentioning only that data will be shared “upon request” counts as a ‘no’.

c. Is materials sharing mentioned? Mentioning any reasons against sharing also counts as a ‘yes’. Mentioning only that materials will be shared “upon request” counts as a ‘no’.

d. Does the article contain an ethics approval statement (e.g., approval granted by institution, or no approval required)?

e. Have conflicts of interest been declared? Declaring that there were none also counts.

Why is this important?

Study protocols, plans, and registrations serve to define a study’s research question, sample, and data collection method. They are usually written before the study is conducted, thus preventing researchers from changing their hypotheses based on their results, which adds credibility. Some study types, like RCT’s, must be registered.

Sharing data and materials is good scientific practice which allows people to review what was done in the study, and to try to reproduce the results. Materials refer to the tools used to conduct the study, such as code, chemicals, tests, surveys, statistical software, etc. Sometimes, authors may state that data will be “available upon request”, or during review, but that does not guarantee that they will actually share the data when asked, or after the preprint is published.

Before studies are conducted, they must get approval from an ethical review board, which ensures that no harm will come to the study participants and that their rights will not be infringed. Studies that use previously collected data do not normally need ethical approval. Ethical approval statements are normally found in the methods section.

Researchers have to declare any conflicts of interest that may have biased the way they conducted their study. For example, the research was perhaps funded by a company that produces the treatment of interest, or the researcher has received payments from that company for consultancy work. If a conflict of interest has not been declared, or if a lack of conflict of interest was declared, but a researcher’s affiliation matches with an intervention used in the study (e.g., the company that produces the drug that is found to be the most effective), that could indicate a potential conflict of interest, and a possible bias in the results. A careful check of the affiliation of the researchers can help identify potential conflicts of interest or other inconsistencies. Conflicts of interests should be declared in a dedicated section along with the contributions of each author to the paper.

Let’s dig deeper

a. Can you access the protocol/study plan (e.g., via number or hyperlink)

b. Can you access at least part of the data (e.g., via hyperlink, or on the preprint server). Not applicable in case of a valid reason for not sharing.

c. Can you access at least part of the materials (e.g., via hyperlink, or on the preprint server). Not applicable in case of a valid reason for not sharing.

d. Can the ethical approval be verified (e.g., by number). Not applicable if it is clear that no approval was needed.

By ‘access’, we mean whether you can look up and see the actual protocol, data, materials, and ethical approval. If you can, you can also look into whether it matches what is reported in the preprint.

4. Limitations

Are the limitations of the study addressed in the discussion/conclusion section?

Why is this important?

No research study is perfect, and it is important that researchers are transparent about the limitations of their own work. For example, many study designs cannot provide causal evidence, and some inadvertent biases in the design can skew results. Other studies are based on more or less plausible assumptions. Such issues should be discussed either in the Discussion, or even in a dedicated Limitations section.

Let’s dig deeper

Check for potential biases yourself. Here are some examples of potential sources of bias.

Check the study’s sample (methods section). Do the participants represent the target population? Testing a drug only on white male British smokers over 50 is probably not going to yield useful results for everyone living in the UK, for example. How many participants were there? There is no one-size-fits-all number of participants that makes a study good, but in general, the more participants, the stronger the evidence.

Was there a control group or control condition (e.g., placebo group or non-intervention condition)? If not, was there a reason? Having a control group helps to determine whether the treatment under investigation truly has an effect on an experimental group and reduces the possibility of making an erroneous conclusion. Not every study can have such controls though. Observational studies, for example, typically do not have a control group or condition, nor do case studies or reviews. If your preprint is on an observational study, case study, or review, this item may not apply.

Was there randomisation? That is, was the allocation of participants or groups of participants to experimental conditions done in a random way? If not, was there a reason? Randomisation is an excellent way to ensure that differences between treatment groups are due to treatment and not confounded by other factors. For example, if different treatments are given to patients based on their disease severity, and not at random, then the results could be due to either treatment effects or disease severity effects, or an interaction - we cannot know. However, some studies, like observational studies, case studies, or reviews, do not require randomisation. If your preprint is on an observational study, case study, or review, this item may not apply.

Was there blinding? Blinding means that some or all people involved in the study did not know how participants were assigned to experimental conditions. For example, if participants in a study do not know whether they are being administered a drug or a sham medication, the researchers can control for the placebo effect (people feeling better even after fake medication because of their expectation to get better). However, blinding is not always possible and cannot be applied in observational studies or reanalyses of existing non-blinded data, for example. If your preprint is on an observational study, case study, or review, this item may not apply).

Episode challenge

Use PRECHECK for two preprints

Question 1